To implement the visualization part of Human-Like AI, we need to think about how to create and animate a 3D human model. There are various existing approaches; one of them is VIBE (Video Inference for Human Body Pose and Shape Estimation), presented at CVPR 2020 by researchers from the Max Planck ETH Center.

There are many ways to represent a human body in 3D. One widely used option is the Skinned Multi-Person Linear (SMPL) model, a vertex-based parametric model learned from thousands of body scans. VIBE is essentially an algorithm that estimates body pose and shape from images (video frames) and outputs them as SMPL parameters.
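To make "parameters of the SMPL model" a little more concrete, here is a minimal sketch of how such a parametric body is laid out. The sizes (6890 vertices, 24 joints with a 3-value axis-angle rotation each, 10 shape coefficients) are those of the standard SMPL model. Everything else is an assumption for illustration: `SmplParams`, `make_placeholder_model`, and `shaped_vertices` are hypothetical names, the template and shape basis are random placeholders rather than the real learned data, and the sketch covers only the linear shape blend, leaving out pose-dependent correctives and skinning.

```python
import random
from dataclasses import dataclass

# Sizes of the standard SMPL model.
NUM_VERTICES = 6890
NUM_JOINTS = 24   # root joint + 23 body joints
NUM_BETAS = 10    # shape coefficients


@dataclass
class SmplParams:
    """Hypothetical container for one frame of SMPL parameters."""
    pose: list   # 72 values: axis-angle rotation (3 values) per joint
    betas: list  # 10 values: body shape coefficients

    def __post_init__(self):
        if len(self.pose) != NUM_JOINTS * 3:
            raise ValueError("pose must have 72 values")
        if len(self.betas) != NUM_BETAS:
            raise ValueError("betas must have 10 values")

    def global_orient(self):
        # The first 3 values rotate the whole body (root joint).
        return self.pose[:3]

    def body_pose(self):
        # The remaining 69 values are 23 per-joint rotations.
        return [self.pose[i:i + 3] for i in range(3, len(self.pose), 3)]


def make_placeholder_model(seed=0):
    """Random stand-ins for the learned template mesh and shape basis."""
    rng = random.Random(seed)
    template = [[rng.gauss(0, 1) for _ in range(3)]
                for _ in range(NUM_VERTICES)]
    shape_dirs = [[[rng.gauss(0, 0.01) for _ in range(NUM_BETAS)]
                   for _ in range(3)]
                  for _ in range(NUM_VERTICES)]
    return template, shape_dirs


def shaped_vertices(template, shape_dirs, betas):
    """Linear shape blend: each coordinate is template + basis . betas."""
    return [
        [t + sum(d * b for d, b in zip(dirs, betas))
         for t, dirs in zip(vertex, vertex_dirs)]
        for vertex, vertex_dirs in zip(template, shape_dirs)
    ]


template, shape_dirs = make_placeholder_model()
params = SmplParams(pose=[0.0] * 72, betas=[0.5] + [0.0] * 9)
vertices = shaped_vertices(template, shape_dirs, params.betas)
```

The point of the sketch is the size of the representation: an entire body mesh is driven by only 82 numbers per frame (72 for pose, 10 for shape), which is what makes SMPL a convenient target for an estimator like VIBE.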

The implementation of VIBE is open source, and both the demo code and the training code are written in PyTorch. One of its main features is that it is designed to work on arbitrary footage in which many people appear, so it is highly versatile. Also, while most similar algorithms are difficult to run in real time, VIBE is reported to process up to 30 FPS on an RTX 2080 Ti. Another advantage of the demo code is that it is easy to put to use, because it can directly export files in formats supported by most 3D graphics software, such as FBX.

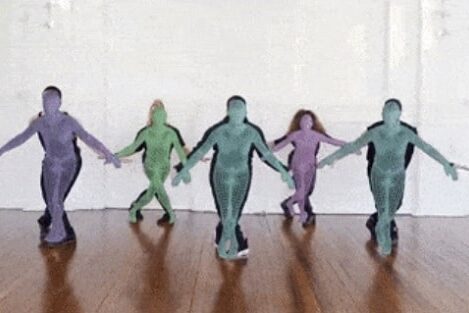

Here are a public demo video and a link to the GitHub repository: