It is widely assumed that a GPU is essential for training deep learning models, but when a trained model is served, CPUs are often used instead of GPUs. For example, Roblox reports that, after some optimization, a 32-core Xeon ran inference on a model as heavy as BERT six times faster than a similarly priced V100. In other words, the CPU delivered six times better cost-performance.

However, looking a little more closely at these numbers, I don't think the comparison is entirely fair. The 32-core Xeon is split into many cores, each with its own cache, which gives it an advantage in how it uses memory bandwidth. The V100, by contrast, is ultimately a single GPU: when several inferences run at the same time, they "compete" for its cache and memory bandwidth, and none of them can reach peak performance.

Because inference uses far less memory than training, a single GPU can run several inferences at the same time. If you actually test this, however, you can see that execution speed drops significantly as the number of concurrent inferences grows. (If execution were fully parallel, the speed should stay the same as long as everything fits in memory. Similarly, increasing the batch size of a single inference also slows it down considerably.)

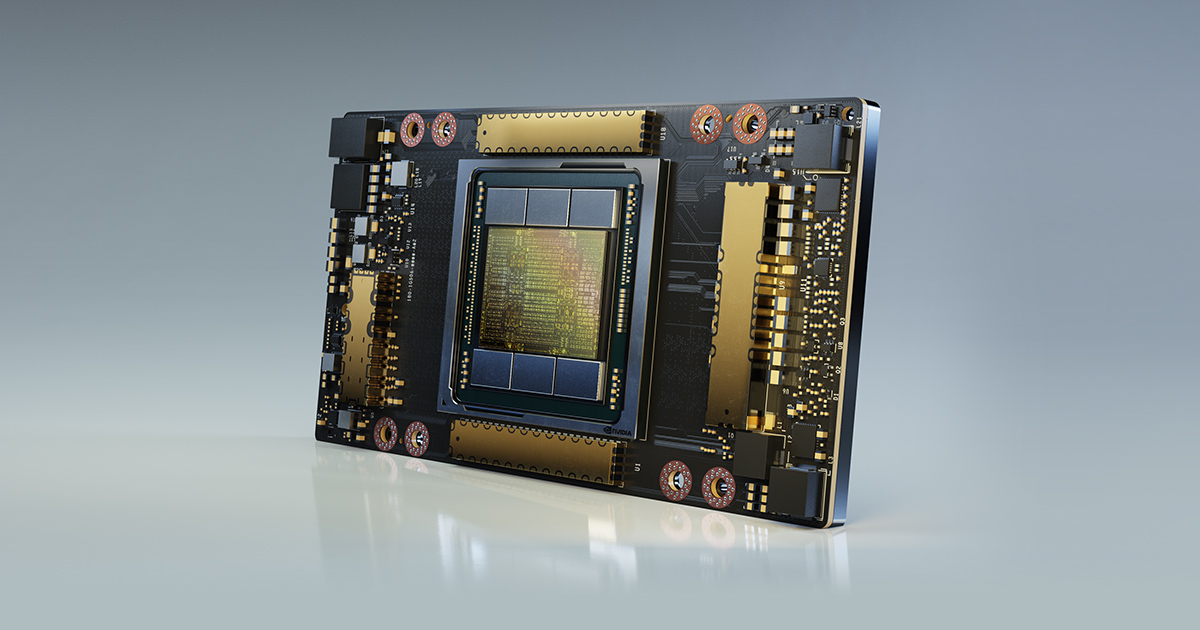
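To reproduce the kind of measurement described above, one could run N workers that call the same inference function at once and watch how the mean per-call latency changes as N grows. The sketch below is a generic harness under stated assumptions, not the setup behind the figure: `mean_latency` and `fake_infer` are hypothetical names, `fake_infer` stands in for a real model call, and threads are used for simplicity (separate processes would more closely mimic independent inference servers). If the real call launches asynchronous GPU work, it should synchronize before returning (in PyTorch, `torch.cuda.synchronize()`); otherwise the timings only capture kernel launches.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean
from typing import Callable, List


def mean_latency(infer_fn: Callable[[], object], concurrency: int,
                 calls_per_worker: int = 10) -> float:
    """Run `concurrency` workers at once, each calling infer_fn repeatedly.

    Returns the mean wall-clock seconds per call across all workers.
    """
    def worker() -> List[float]:
        latencies = []
        for _ in range(calls_per_worker):
            start = time.perf_counter()
            infer_fn()  # must block until the inference has really finished
            latencies.append(time.perf_counter() - start)
        return latencies

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(worker) for _ in range(concurrency)]
        all_latencies = [t for f in futures for t in f.result()]
    return mean(all_latencies)


if __name__ == "__main__":
    # Placeholder workload; swap in a real model call (hypothetical here).
    def fake_infer() -> None:
        time.sleep(0.01)

    for n in (1, 2, 4, 8):
        print(f"concurrency={n}: {mean_latency(fake_infer, n) * 1000:.1f} ms/call")
```

With the sleep-based placeholder the latency stays roughly flat, because the workers do not share any resource. If a real GPU workload shows latency rising with concurrency, that points to contention for something shared, which is the effect attributed above to cache and memory bandwidth.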

The Multi-Instance GPU (MIG) technology introduced with Ampere, NVIDIA's new GPU architecture, looks like it can overcome this limitation. A single GPU can be partitioned into up to seven logically independent sub-GPUs, and each sub-GPU is allocated its own share of cache and memory bandwidth, so performance on real inference workloads is expected to improve significantly. Ampere also adds TF32, a new 19-bit format that sits between FP16 and FP32 (it keeps FP32's 8-bit exponent and FP16's 10-bit mantissa), which reportedly improves performance by about 6x. (This is not a free improvement: the speedup comes at the cost of precision.)
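To make the precision trade-off concrete, the sketch below emulates TF32 in plain Python: it takes the FP32 bit pattern of a value and keeps only the top 10 of the 23 mantissa bits, leaving the 8-bit exponent untouched. The function name `to_tf32` is hypothetical, and the use of round-to-nearest-even is an assumption for illustration; it does not claim to reproduce exactly how Tensor Cores or cuBLAS round their inputs.

```python
import struct


def to_tf32(x: float) -> float:
    """Round a value to TF32 precision (FP32 exponent, 10 mantissa bits).

    Assumes round-to-nearest-even. NaN, infinity and values outside the
    FP32 range are not handled specially in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # raw FP32 bit pattern
    dropped = 23 - 10                                    # 13 low mantissa bits discarded
    lsb = (bits >> dropped) & 1                          # lowest kept bit, for ties-to-even
    bits = (bits + (1 << (dropped - 1)) - 1 + lsb) & ~((1 << dropped) - 1) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    for v in (0.1, 1.0 / 3.0, 3.14159265):
        print(v, to_tf32(v))
```

Because only 10 mantissa bits survive, the relative rounding error is at most 2^-11 (about 0.05%), compared with 2^-24 for FP32, while the dynamic range stays the same as FP32. In PyTorch, whether TF32 is used for matrix multiplications and cuDNN convolutions is controlled by `torch.backends.cuda.matmul.allow_tf32` and `torch.backends.cudnn.allow_tf32`.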

Assuming, roughly, that MIG and TF32 together deliver a 20x (!) performance increase, the GPU would overturn the CPU's 6x advantage from the Roblox example and come out about 3x more efficient (20 / 6 ≈ 3.3). That would make GPUs competitive for serving, but... on cloud instances, a GPU machine costs more than three times as much as a CPU machine, so it is hard to say the GPU would still come out ahead.

The RTX 3090 24GB is due out in the second half of the year, so I'd like to buy one and run various tests (to verify how effective MIG and TF32 really are). Link: NVIDIA Ampere Architecture introduction: