There have been many attempts to convert code written in one programming language into another, and many commercial tools exist for the job. The main motivation is compatibility: for example, converting code written in legacy or deprecated languages such as FORTRAN, BASIC, or Python 2 to C++ or Python 3.

The approach used so far is to parse the source code into a syntax tree and then convert it by applying rules written by experts who know both programming languages. This approach sometimes fails outright, and even when the conversion succeeds, the resulting code is often of low quality.
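To make the rule-based approach concrete, here is a toy sketch (not the implementation of any commercial tool) that parses a Python function with the standard `ast` module and translates it to C++ by applying one hand-written rule per node type. The function names `expr_to_cpp`, `stmt_to_cpp`, and `function_to_cpp` are hypothetical. It assumes every parameter and return value is an `int`, because Python source carries no type information the rules could use.

```python
import ast

# Hand-written rules: Python AST operator types -> C++ operator strings.
BINOP_RULES = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*"}
COMPARE_RULES = {ast.Lt: "<", ast.LtE: "<=", ast.Gt: ">", ast.GtE: ">=",
                 ast.Eq: "==", ast.NotEq: "!="}


def expr_to_cpp(node: ast.expr) -> str:
    """Translate a Python expression node to C++ source, rule by rule."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Constant) and type(node.value) is int:
        return str(node.value)
    if isinstance(node, ast.BinOp) and type(node.op) in BINOP_RULES:
        # Emit parentheses around every binary op so precedence is preserved.
        op = BINOP_RULES[type(node.op)]
        return f"({expr_to_cpp(node.left)} {op} {expr_to_cpp(node.right)})"
    if (isinstance(node, ast.Compare) and len(node.ops) == 1
            and type(node.ops[0]) in COMPARE_RULES):
        op = COMPARE_RULES[type(node.ops[0])]
        return f"{expr_to_cpp(node.left)} {op} {expr_to_cpp(node.comparators[0])}"
    # No rule matches: a rule-based translator simply gives up here.
    raise NotImplementedError(f"no rule for {ast.dump(node)}")


def stmt_to_cpp(node: ast.stmt, indent: int = 1) -> list[str]:
    """Translate a Python statement node to a list of C++ source lines."""
    pad = "    " * indent
    if isinstance(node, ast.Return) and node.value is not None:
        return [f"{pad}return {expr_to_cpp(node.value)};"]
    if isinstance(node, ast.If):
        lines = [f"{pad}if ({expr_to_cpp(node.test)}) {{"]
        for s in node.body:
            lines += stmt_to_cpp(s, indent + 1)
        if node.orelse:
            lines.append(f"{pad}}} else {{")
            for s in node.orelse:
                lines += stmt_to_cpp(s, indent + 1)
        lines.append(f"{pad}}}")
        return lines
    raise NotImplementedError(f"no rule for {type(node).__name__}")


def function_to_cpp(source: str) -> str:
    """Translate a single Python function definition to a C++ function."""
    func = ast.parse(source).body[0]
    if not isinstance(func, ast.FunctionDef):
        raise NotImplementedError("expected a single function definition")
    # Assumption: all parameters and the return type are int.
    params = ", ".join(f"int {a.arg}" for a in func.args.args)
    lines = [f"int {func.name}({params}) {{"]
    for stmt in func.body:
        lines += stmt_to_cpp(stmt)
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    src = "def max_plus(a, b):\n    if a > b:\n        return a + 1\n    else:\n        return b * 2\n"
    print(function_to_cpp(src))
```

Any construct without a rule raises `NotImplementedError`, which mirrors the brittleness described above. Division and modulo are deliberately left out: Python's `//` floors while C++ integer `/` truncates toward zero (and `%` differs accordingly), so a naive one-to-one rule would silently change behavior for negative operands.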

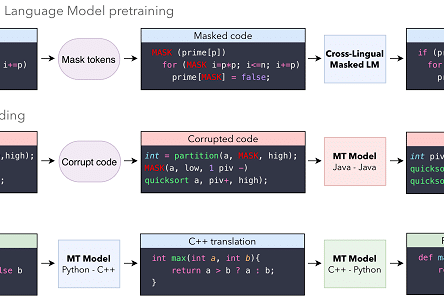

In natural language translation, translators based on neural network models have outperformed rule-based translators. However, they usually require a large amount of parallel data, that is, text in the source language paired with its translation in the target language, and such data is very difficult to obtain for programming languages. This study tackles the data shortage by relying entirely on unsupervised learning; in particular, it applies masked language model pretraining, a technique widely used in natural language models.
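The masked language model objective can be illustrated with a small sketch of how training examples are built from source code alone. It uses the generic BERT-style recipe (select about 15% of tokens; replace 80% of those with a mask token, 10% with a random token, and leave 10% unchanged) and a toy regex tokenizer. Both are assumptions for illustration, not TransCoder's actual preprocessing, and the names `tokenize`, `mask_tokens`, and `<mask>` are hypothetical.

```python
import random
import re
from typing import Optional

MASK = "<mask>"  # hypothetical mask token


def tokenize(code: str) -> list[str]:
    """Toy tokenizer: runs of word characters, or single punctuation characters."""
    return re.findall(r"\w+|[^\w\s]", code)


def mask_tokens(tokens: list[str], vocab: list[str], rng: random.Random,
                mask_prob: float = 0.15) -> tuple[list[str], list[Optional[str]]]:
    """Build one masked-LM example: (corrupted input, per-position labels).

    labels[i] holds the original token at selected positions, None elsewhere.
    """
    inputs = list(tokens)
    labels: list[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected; nothing to predict here
        labels[i] = tok  # the model is trained to recover the original token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK  # 80%: replace with the mask token
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token in the input
    return inputs, labels


if __name__ == "__main__":
    rng = random.Random(0)
    tokens = tokenize("int add(int a, int b) { return a + b; }")
    inputs, labels = mask_tokens(tokens, vocab=sorted(set(tokens)), rng=rng)
    print(" ".join(inputs))
    print(labels)
```

Because the labels come from the code itself, no paired translations are needed, which is why this kind of objective suits the data shortage described above.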

Below is a link to the GitHub repository and paper for Facebook's TransCoder, which translates between Java, C++, and Python. Pretrained models and test data are included, so you can try it right away. According to the results reported in the paper, despite being trained without supervision, it outperforms commercial tools built for the same purpose.

> …converts source code from a high-level programming language (such as C++ or Python) to another. Transcompilers are primarily used for interoperability, and to port codebases written in an obsolete or deprecated language (e.…