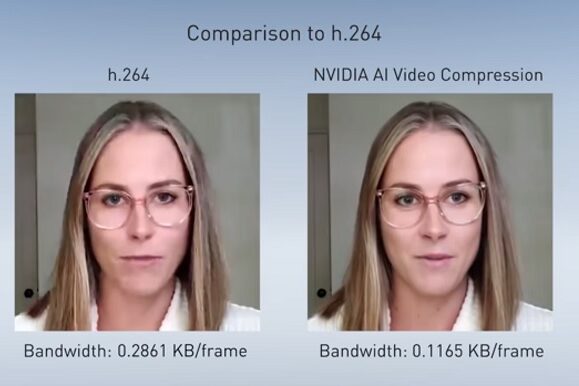

NVIDIA unveiled Maxine, a cloud-based video communication platform. What sets Maxine apart is how thoroughly it adopts AI. Instead of compressing facial video with a conventional codec such as H.264 and sending the pixels, the sender detects facial landmarks and transmits only those, and the receiver uses a GAN to generate the face image from them. NVIDIA reportedly claims this cuts the required bandwidth to about one-tenth of H.264.
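To get a feel for why sending landmarks instead of pixels saves bandwidth, here is a minimal Python sketch of a hypothetical landmark payload. Every detail of the format is an assumption for illustration, not how Maxine actually works: 68 landmarks per frame (a widely used facial landmark convention), coordinates normalized to [0, 1] and quantized to 16 bits, and no entropy coding or packet headers. The names `encode_landmarks`, `decode_landmarks`, and `landmark_bitrate_kbps` are made up for this example.

```python
import struct
from typing import List, Tuple

# Hypothetical payload layout (an assumption, not NVIDIA's actual format):
# each landmark is an (x, y) pair normalized to [0, 1], and each coordinate
# is quantized to an unsigned 16-bit integer, packed little-endian.
NUM_LANDMARKS = 68      # common 68-point facial landmark convention
QUANT_LEVELS = 65535    # max value of an unsigned 16-bit integer
FMT = f"<{2 * NUM_LANDMARKS}H"  # 136 unsigned shorts per frame

Landmark = Tuple[float, float]


def encode_landmarks(points: List[Landmark]) -> bytes:
    """Quantize normalized (x, y) landmarks and pack them into bytes."""
    if len(points) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(points)}")
    values = []
    for x, y in points:
        for v in (x, y):
            v = min(max(v, 0.0), 1.0)              # clamp to [0, 1]
            values.append(round(v * QUANT_LEVELS))  # scale to 16-bit range
    return struct.pack(FMT, *values)


def decode_landmarks(payload: bytes) -> List[Landmark]:
    """Inverse of encode_landmarks; a receiver would feed these to its generator."""
    values = struct.unpack(FMT, payload)
    return [(values[i] / QUANT_LEVELS, values[i + 1] / QUANT_LEVELS)
            for i in range(0, len(values), 2)]


def landmark_bitrate_kbps(fps: float) -> float:
    """Raw bitrate of the landmark stream, ignoring headers and entropy coding."""
    bytes_per_frame = struct.calcsize(FMT)
    return bytes_per_frame * 8 * fps / 1000


if __name__ == "__main__":
    # Synthetic landmarks, just to exercise the round trip.
    frame = [(i / 100, 1 - i / 100) for i in range(NUM_LANDMARKS)]
    payload = encode_landmarks(frame)
    print(len(payload))               # 272 bytes per frame
    print(landmark_bitrate_kbps(30))  # 65.28 kbps at 30 fps
```

Under these assumptions each frame is 272 bytes (68 × 2 coordinates × 2 bytes), or about 65 kbps at 30 fps before any further compression. The sketch says nothing about anything else the receiver may need to render a face, and the generator that turns these points back into an image, which is where the heavy computation lies, is not shown.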

About 25 years ago, in the early days of video communication, model-based facial video compression appeared briefly, but it could not deliver image quality good enough for commercial services. Video calls also did not need much bandwidth to begin with (there is little motion in the frame), so until now the standard approach has been to lossy-compress every pixel of the image and transmit it. With advances in AI techniques such as GANs and the explosive growth in demand for video calls caused by COVID-19, however, reducing bandwidth has once again become an important requirement. Still, real-time facial landmark detection and image generation need far more computation (and power) than H.264 encoding/decoding, which is handled by dedicated hardware, so I wonder how NVIDIA dealt with this trade-off between network cost and encoding/decoding cost.

Besides facial video transmission, Maxine offers several other functions. It bundles many building-block technologies that have emerged in AI in recent years: detecting a person's outline so the background can be replaced with any image, real-time multilingual conversation that combines speech recognition and translation, noise removal, and image quality enhancement through super-resolution. It is always a pleasant experience to see various building blocks combined into a single service ^^