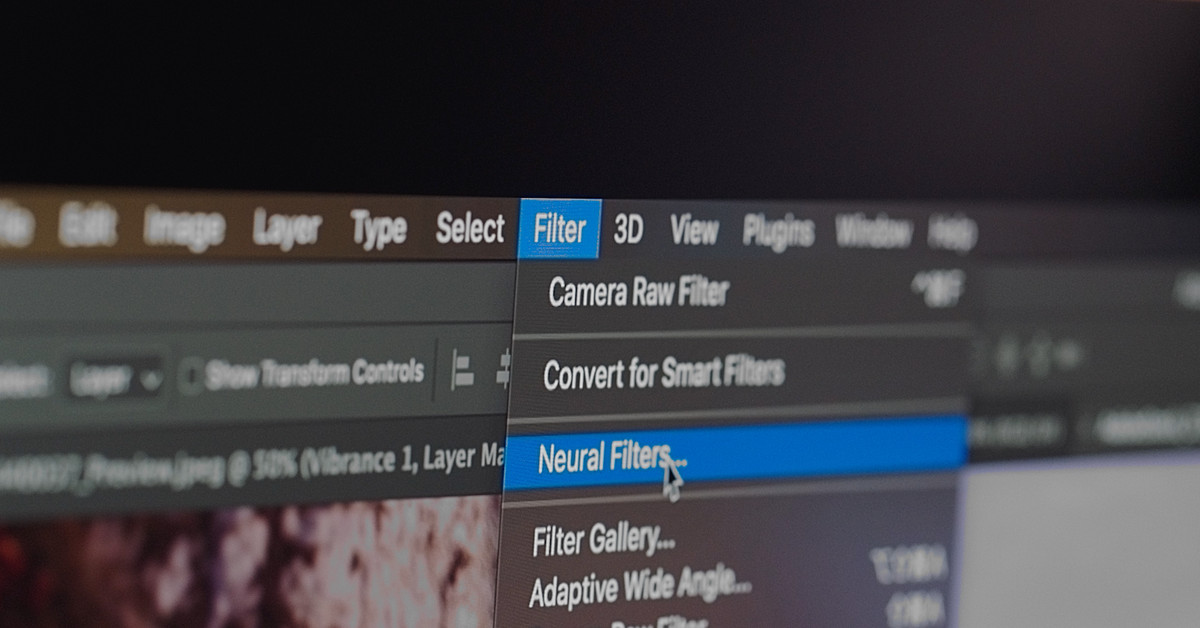

Adobe has announced Neural Filters, an AI-based editing tool. It is reportedly already included in the latest version of Photoshop.

Its functions include replacing the entire sky in a photo with a different one (a clear sky, a cloudy sky, and so on) and using AI to select the edges of an object. With a portrait, it can also change the subject's age, facial expression, or emotion. You can even change the direction of the subject's gaze, adjust hair thickness, or add glasses. These features are reportedly built largely on GAN (generative adversarial network) technology.

The link we shared gives a more detailed explanation. Not every problem has been solved yet. For example, issues arising from bias in the training data (such as skin color) appear to be treated as an important open challenge.

I feel that the concept of "editing" is gradually shifting from modifying digital pixels to working with the content itself and generating it as needed. AI technology is what makes this shift possible.