[Prior Research Team Yoo Hee-Jo]

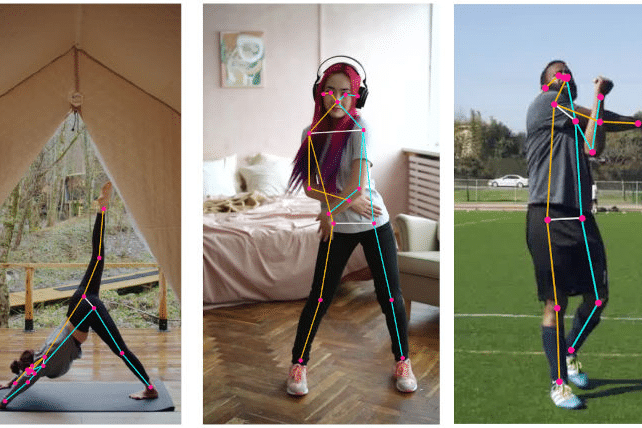

Pose estimation is a visual processing technique that tracks the movement of people in a video. Body landmarks, analogous to facial landmarks, are extracted and connected to describe the posture of the whole body. In most cases the goal is to infer the subject's pose from video, especially real-time camera input, rather than from a single still image. As mentioned in the previous post on HyperGAN, it is becoming increasingly common to make video-based models such as pose estimators as lightweight as possible, for reasons such as cost and latency, and to run them locally.
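To make the idea of "extracting landmarks and connecting them" concrete, here is a minimal sketch in TypeScript. It assumes each landmark arrives with a name, image coordinates, and a confidence score. The `Keypoint` type, the `buildSkeleton` helper, the COCO-style landmark names, and the 0.3 threshold are illustrative assumptions, not something taken from a specific library.

```typescript
// Hypothetical landmark shape for illustration; real libraries define their own types.
interface Keypoint {
  name: string;
  x: number;
  y: number;
  score: number; // detection confidence in [0, 1]
}

type Segment = [Keypoint, Keypoint];

// Pairs of landmark names to join into limbs (upper body and torso only, for brevity).
const SKELETON_EDGES: ReadonlyArray<readonly [string, string]> = [
  ["left_shoulder", "right_shoulder"],
  ["left_shoulder", "left_elbow"],
  ["left_elbow", "left_wrist"],
  ["right_shoulder", "right_elbow"],
  ["right_elbow", "right_wrist"],
  ["left_shoulder", "left_hip"],
  ["right_shoulder", "right_hip"],
  ["left_hip", "right_hip"],
];

// Returns the line segments to draw, skipping any edge whose endpoints
// are missing or below the (assumed) confidence threshold.
function buildSkeleton(keypoints: Keypoint[], minScore = 0.3): Segment[] {
  const byName = new Map<string, Keypoint>();
  for (const kp of keypoints) {
    byName.set(kp.name, kp);
  }

  const segments: Segment[] = [];
  for (const [from, to] of SKELETON_EDGES) {
    const a = byName.get(from);
    const b = byName.get(to);
    if (a && b && a.score >= minScore && b.score >= minScore) {
      segments.push([a, b]);
    }
  }
  return segments;
}
```

Filtering on the confidence score keeps occluded or off-screen joints from producing stray lines, which matters more for live camera input than for a carefully framed still image.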

Google Research has released an API for MoveNet, a lightweight pose estimation model, built on TensorFlow.js. It comes in two variants, the speed-oriented Lightning and the accuracy-oriented Thunder, both of which are reported to run at more than 30 FPS on modern desktops and laptops. On mobile, the official figures are over 30 FPS on the iPhone and 12 FPS on the Pixel 5. When I tested the official demo site on my own mobile device (a Galaxy Z Fold 2), Lightning ran at about 15 FPS and Thunder at about 10 FPS.
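FPS figures like the ones above depend on how they are measured. As a rough sketch, frame rate can be estimated from the timestamps of the most recent frames. The `FpsMeter` class and its default window of 30 frames are hypothetical choices for illustration, and this is not necessarily how the official demo computes its number.

```typescript
// Sliding-window FPS estimate: (frames - 1) intervals divided by elapsed time.
class FpsMeter {
  private readonly windowSize: number;
  private timestamps: number[] = [];

  constructor(windowSize = 30) {
    this.windowSize = windowSize;
  }

  // Call once per processed frame with the current time in milliseconds.
  tick(nowMs: number): void {
    this.timestamps.push(nowMs);
    if (this.timestamps.length > this.windowSize) {
      this.timestamps.shift(); // drop the oldest frame
    }
  }

  // Average frames per second over the window; 0 until two frames are seen.
  fps(): number {
    const n = this.timestamps.length;
    if (n < 2) return 0;
    const elapsedMs = this.timestamps[n - 1] - this.timestamps[0];
    return elapsedMs > 0 ? ((n - 1) * 1000) / elapsedMs : 0;
  }
}
```

In a browser, one would call `meter.tick(performance.now())` after each pose estimate completes, so that the figure reflects the full inference loop and not just rendering.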

The research team appears to be planning to apply MoveNet mainly in healthcare-related settings, such as hospitals, insurance companies, and the military.

For more details, see the official MoveNet link from Google below.

Links:

https://storage.googleapis.com/tfjs-models/demos/pose-detection/index.html?model=movenet