[Prior Research Team, Kim Moo-seong]

Eternal sunshine

'Eternal Sunshine', directed by Michel Gondry, is a romantic sci-fi film about memory and parting, starring Jim Carrey and Kate Winslet as a couple. In the film, there is a technology that can erase memories. The main character, played by Jim Carrey, has a fight with his girlfriend, soon regrets it, and goes to see her to make up. However, he discovers that his girlfriend, played by Kate Winslet, has had her memories of dating him erased. So Jim Carrey's character asks for the same procedure to erase his memories of her. And…

We are building an artificial hippocampus

Why start with a movie? Because what I'm introducing today is one of DeepMind's recent papers on agent memory systems. It is widely acknowledged that DeepMind, though not the only group doing so, is almost singular in its effort to give artificial intelligence 'memory'. During his visit to Korea for the AlphaGo match, Demis Hassabis, the head of DeepMind, even said publicly that he was conducting research to artificially imitate the hippocampus, the part of the human brain considered most closely tied to memory.

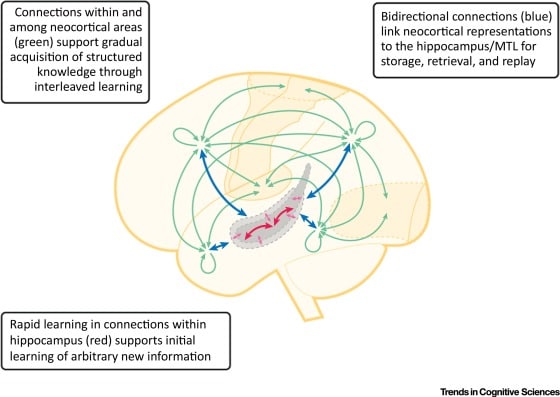

Since then, DeepMind has steadily proposed artificial intelligence memory systems inspired by the mammalian hippocampus. These include a complementary memory system, based on the idea that the hippocampus's fast pattern memory and the cerebral cortex's slow generalized memory work simultaneously, and an episodic memory system. They also include a memory system that encodes predictions and a dual-encoding memory system that separates the modalities of the content to be memorized. Finally, there is a generative memory system, based on the idea that memory is 'generation' reflecting experienced instances rather than storage and retrieval. Each was engineered with the latest deep learning models available when its paper was published, while reflecting certain aspects of human memory.

Mental time travel

In Eternal Sunshine, a man (Jim Carrey) visits a doctor who claims he can erase memories. He is told to put a strange device on his head and to think of as many things about her as he can. The doctor explains the film's premise: the moment a memory is recalled, it can be erased by applying an electromagnetic shock to the brain regions it activates. Soon the memory removal procedure begins.

Now asleep, the man watches scenes from his memory as if in a dream. He sees a quarrel between him and her that brought them to the point of parting. As he watches gloomily, the scene darkens and he falls back to an earlier point in the past. The memory has been deleted!

Doesn't it feel like time travel (only into the past, though)? This is how Jim Carrey travels through his memories. It is a very entertaining cinematic device. Surprisingly, cognitive scientists also use time travel as a metaphor for certain aspects of memory.

Memory can be classified in several ways. By the kind of content stored, it can be divided into 'episodic memory' and 'semantic memory'. Episodic memory is memory organized around one's personal events. Remembering 'the moment I first rode a bike' or 'the day I went on an overseas trip' is different from remembering 'the day Yi Sun-shin was born' or 'the historical meaning of Liberation Day'. Psychologists have called episodic memory 'mental time travel', because recalling it is like traveling back to a specific point in the past. In the movie, the man keeps witnessing scenes (memories) of dating her. What comes to mind is not a piece of knowledge like 'I went to a restaurant with her'. Instead, he relives the sounds, music, tastes, smells, words, face, and emotions of the moments he spent with her.

HTM

Now, finally, to the paper itself. But first, a joke. In the paper introduced here, DeepMind calls its memory system HTM for short. If you're interested in biologically inspired artificial intelligence, doesn't something come to mind? Yes: the model that the research group Numenta has long championed is also called HTM. However, Numenta's HTM stands for Hierarchical Temporal Memory, while the HTM in this DeepMind paper stands for Hierarchical Transformer Memory. Numenta, the senior party here, has used that abbreviation for a long time, so DeepMind could hardly have been unaware of it. Numenta's community has even asked why DeepMind ignores HTM (https://discourse.numenta.org/t/why-is-htm-ignored-by-google-deepmind/2589). Looking at these points, DeepMind seems to be keeping some distance (you know this is a bit of a joke, right?).

This is a long digression. I wanted to show the difference between DeepMind and the older research groups doing cognitive-science-inspired AI, so I wrote a little about it. There are a number of differences, but they may basically reflect DeepMind's strategic cleverness. DeepMind wants to create AI systems based on biological (more precisely, neuroscientific) inspiration, but it does not try to preserve the biological details. Its predecessor, Numenta's HTM, strives to faithfully reflect the phenomena and constraints revealed by neuroscience findings. DeepMind, by contrast, considers the system level more important. It borrows the structural aspect of a memory system and builds the details by stacking whatever machine learning modules are current, like Lego blocks. So the HTM introduced here is built on transformers, the hottest models these days.

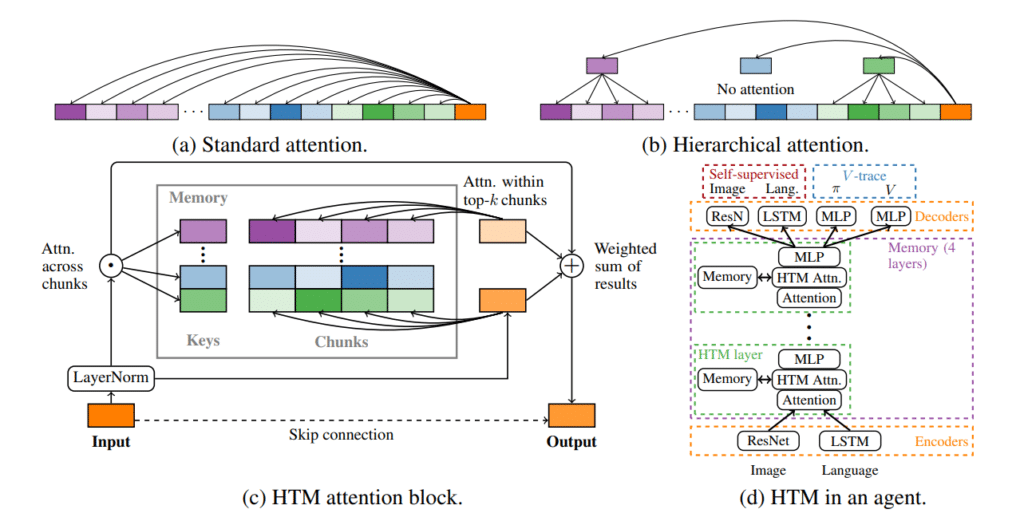

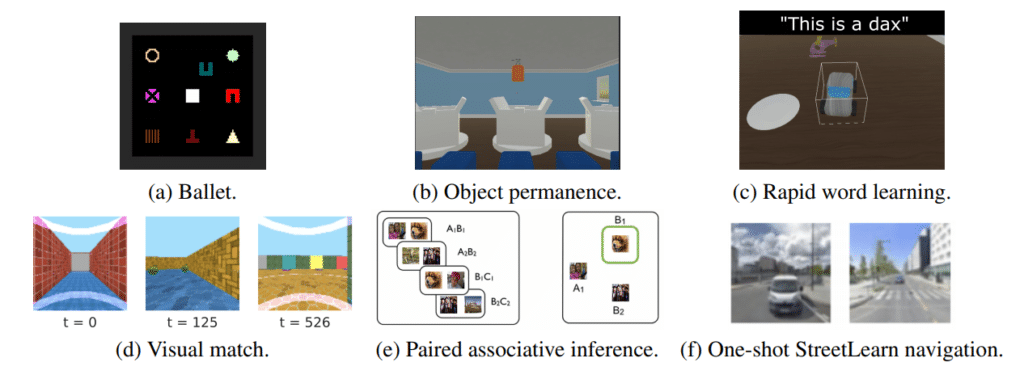

Towards mental time travel: a hierarchical memory for reinforcement learning agents

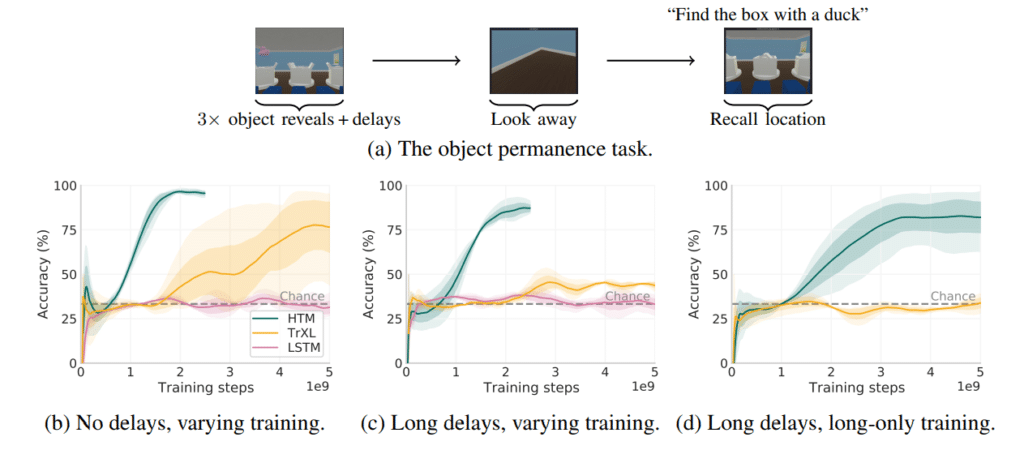

The keywords of this paper are reinforcement learning agents, episodic memory, hierarchical attention transformers, and memory span (chunks). The problem is as follows. Quite a few studies have tried to incorporate a memory system into a reinforcement learning agent. However, most of them tended to fail when given a new task or when distracting elements were mixed in. Interference with past learning from new learning events or similar tasks is a well-known weakness of deep-learning-based models in particular. Quite a few studies have also tried to use the transformer as a memory module for reinforcement learning agents. Until then, such memory had been implemented with LSTMs, and the expectation was that the stronger transformer would improve results. However, the transformer, so effective in other domains, was reported to perform worse than expected in reinforcement learning. Modifying the transformer's structure to overcome this did not help much either. The hope was that the flexibility of self-attention would let an agent remember and handle a longer past (a long sequence of events) efficiently, but that did not work as well as expected.

The paper proposes two reasons for this. The first is that the 'past to be remembered' needs to be broken down into chunks. Memory is not simply a sequence of events; several discontinuous episodes are intermingled. In Eternal Sunshine, Jim Carrey jumps between scenes from his past. In the same way, the paper argues, the past should be stored as chunks, and the agent should jump between the chunks it needs.
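To make the idea of chunking concrete, here is a minimal sketch. It assumes the past is a list of event embedding vectors, that chunks are consecutive fixed-size slices, and that each chunk is summarized by mean pooling. The names `make_chunks`, `summarize`, and `chunk_size` are hypothetical illustrations, not the paper's code.

```python
from typing import List

Vector = List[float]


def make_chunks(events: List[Vector], chunk_size: int) -> List[List[Vector]]:
    """Split a sequence of event embeddings into consecutive fixed-size chunks.

    Hypothetical helper: the last chunk is shorter if len(events) is not a
    multiple of chunk_size.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [events[i:i + chunk_size] for i in range(0, len(events), chunk_size)]


def summarize(chunk: List[Vector]) -> Vector:
    """Mean-pool a chunk into a single summary vector (an assumed summarizer)."""
    dim = len(chunk[0])
    return [sum(vec[d] for vec in chunk) / len(chunk) for d in range(dim)]


# Six 2-D "events": the first three form one episode, the last three another.
events = [[1.0, 0.0], [3.0, 0.0], [2.0, 0.0],
          [0.0, 1.0], [0.0, 5.0], [0.0, 3.0]]
chunks = make_chunks(events, chunk_size=3)
summaries = [summarize(c) for c in chunks]
print(summaries)  # [[2.0, 0.0], [0.0, 3.0]]
```

Each summary acts like a thumbnail of its chunk. Fixed-size slicing is the simplest choice for a sketch; a real agent's chunk boundaries and summaries would be learned or chosen according to the paper's design.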

The second is that once the relevant chunks of this fragmented past are selected, only their details need attention. Think of how we skim a video by looking at its thumbnails. When something catches our interest, we stop at that point and play it slowly. Episodic memory should be approached the same way.

So the core of HTM is a hierarchical arrangement of two attentions. A higher-level attention works over 'summaries of the past' divided into chunks. A lower-level attention then looks at the details inside the selected chunks. To test its performance, the authors present visual and verbal (text) stimuli in a Unity-based virtual environment and pose several memory tasks. The results are better than, or more efficient than, those of existing models.
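The two-level read described above can be sketched in plain Python. This is a simplified illustration under several assumptions, not DeepMind's implementation. First, raw vectors serve as both keys and values, with no learned projections or multiple heads. Second, chunk summaries are mean-pooled. Third, the top-`top_k` chunk scores are softmax-normalized and used to weight each chunk's detailed attention output. This is our reading of the paper's description; consult the paper for the exact details. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention of one query over a set of keys/values."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


def hierarchical_read(query: Vector, memory: List[Vector],
                      chunk_size: int, top_k: int) -> Tuple[Vector, List[int]]:
    """Hypothetical two-level read: pick top_k chunks by summary, then attend inside."""
    # Split the past into consecutive fixed-size chunks.
    chunks = [memory[i:i + chunk_size] for i in range(0, len(memory), chunk_size)]
    # Mean-pool each chunk into a summary vector (assumed summarizer).
    dim = len(memory[0])
    summaries = [[sum(v[j] for v in c) / len(c) for j in range(dim)] for c in chunks]
    # Higher-level attention: score every chunk summary against the query.
    scale = math.sqrt(len(query))
    scores = [dot(query, s) / scale for s in summaries]
    top = sorted(range(len(chunks)), key=lambda i: scores[i], reverse=True)[:top_k]
    relevance = softmax([scores[i] for i in top])
    # Lower-level attention: detailed attention inside each selected chunk,
    # weighted by that chunk's relevance.
    out = [0.0] * dim
    for r, i in zip(relevance, top):
        detail = attend(query, chunks[i], chunks[i])
        out = [o + r * x for o, x in zip(out, detail)]
    return out, top


memory = [[1.0, 0.0], [3.0, 0.0], [2.0, 0.0],
          [0.0, 1.0], [0.0, 5.0], [0.0, 3.0]]
out, chosen = hierarchical_read([0.0, 1.0], memory, chunk_size=3, top_k=1)
print(chosen)  # [1]
```

Here the query matches the second chunk's summary, so only that chunk is "played slowly". The first chunk is never examined in detail. Skipping unselected chunks is what makes the hierarchical read cheaper than attending over every past step.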

Airplanes do not mimic the flapping of a bird's wings

An interesting paper, but did these results really advance agents' 'memory systems'? That is hard to answer with certainty. DeepMind has consistently proposed memory models, but their practical impact has been smaller than I expected. One reason may be that giving AI 'memory' is fundamentally a hard problem. But there seems to be another reason. DeepMind has tried to avoid its predecessors' mistake of transferring biological inspiration directly. Even so, it is still closer to those predecessors than to the more recent, pragmatic research groups. DeepMind admits in one paper that its memory model performs better but is too complex to scale to large systems or for fellow researchers to build on. So this paper also pursues simplicity to some extent and leans toward using transformers in a practical way. Still, I don't think anything is more impressive than the strategy behind OpenAI's GPT-3: take a simple structure, scale it up, and pour in data at a formidable scale. It is a valid argument that the human mental functions AI aims to emulate have structural aspects. However, it is not yet proven that tackling them through a specific structure is justified (though personally, I lean toward the structural side). For now, we have no choice but to acknowledge both directions and keep watching.

Eternal light that shines upon a blameless heart

On the academic side, what would be gained by approaching AI 'memory' in the way introduced today? AI could solve more complex and longer problems. It could also handle meta-learning and few-shot learning tasks better. And it could enable models that keep learning and improving on complex problems.

But 'memory' is also an essential part of being human; it is key to defining 'me' and my 'relationships'. In Eternal Sunshine, the protagonist wants to stop the erasure while it is under way. Because the most recent memories are deleted first, the deletion at first falls mostly on the bad events and emotions just before the breakup. But as it reaches further into the past, the scenes he encounters are cherished memories full of joy and excitement, meaning and empathy. They are no longer just about the other person; they are an important part of himself.

The film's full original title is 'Eternal Sunshine of the Spotless Mind'. Perhaps that is because 'memory' will illuminate us forever, and in those scenes there must be a spotless heart.

Therefore, I think that the more 'memory' we give to AI, the more likely we are to witness a more 'human' AI. Such an AI will grow with us, because 'memories' can capture the moment we begin to have a 'spotless mind'.

How happy is the blameless vestal's lot!

The world forgetting, by the world forgot.

Eternal sunshine of the spotless mind.

Each prayer accepted and each wish resigned.

References

- Towards mental time travel: a hierarchical memory for reinforcement learning agents – https://arxiv.org/abs/2105.14039