DALL-E, released by OpenAI, is a technology that generates images from natural language text. Previously, there were technologies for the same purpose, such as StackGAN and OP-GAN, but DALL-E has the advantage that the quality of the final result is remarkably excellent because it is made based on GPT-3, a super-scale language model. Share related blog posts.

Here are some examples from the article I shared. First, the input sentence looks like this:

A [store front] that has the word [openai] written on it

Here is the image obtained as output using DALL-E:

On the other hand, if you change the part called openai to skynet in the input image, you can get the following image:

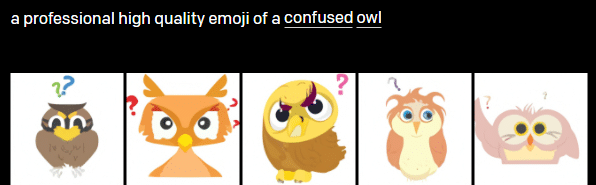

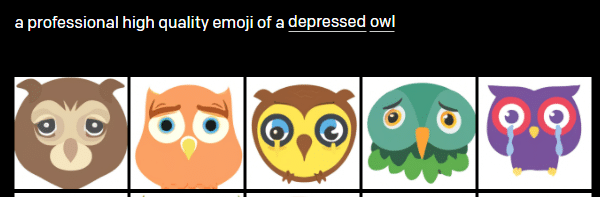

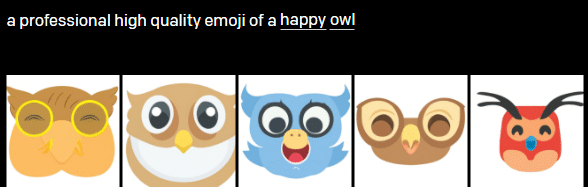

Another example is the creation of emojis with various facial expressions. It's amazing how the generative model behaves in response to subtle word changes as well as the excellent quality of the result:

It is said that DALL-E does not only allow natural language input, but also gives an image with a specific style, and it is also possible to create a result according to the image style. More examples can be found on the shared blog.

GPT-3 has brought revolutionary performance improvements in a variety of natural language processing problems, and especially when it comes to sentence generation, it has made a huge repercussion that it may be an early form of artificial general intelligence (AGI). Of course, it is pointed out that it is far from the way humans think and is different from the essence, but it must be a surprising technological result. DALL-E is also giving the same impact as GPT-3 in the field of image generation. It has far exceeded existing technologies in terms of the diversity and quality of the results, and many further analyzes will be made to assess their potential. In addition, considering that GPT-3 and DALL-E are actually based on the same technology, it can be seen as a technology that can be processed into one without distinction between natural language and images, which is one step further to human intelligence that generally understands multimodal data. It seems clear that it has progressed.