[Service Development Team, Yeji Jo]

When we define human-like AI, we usually mean an AI that acts like a human and thinks like a human. Although each person expects a different shape and form of AI, various attempts have been made to realize the AI that many people are pursuing.

AI personification

So what does AI ultimately look like when it looks the most human?

Six years have passed since the match between Lee Sedol and AlphaGo in early 2016, when interest in AI began to grow.

At the time of the Go match between Lee Sedol and AlphaGo, AI won a game that Lee Sedol had been expected to win, and people came to vaguely regard the AI of the future as an object of fear. Yet, strangely, according to Chang-Hoon Oh, a researcher at Boston College, there were cases in which people, through their first interactions with AI, described it in human terms, as creative or ruthless.

In addition, in an experiment by Oh in which AI and humans drew together, participants could choose whether to lead or to assist in the task. The experiment showed that people wanted to take the initiative in both the lead and assist modes. In other words, people expected the AI to play only a supporting role, with the human making the final decision. Also, while working together, people personified the AI: they talked to it, taught it, and expressed their emotions to it.

When AI performs tasks better than humans, ordinary people feel distant from it or portray it as something to fear. At the same time, when they actually talk with AI, they want more detailed communication rather than a simple conversation.

Perhaps, as interaction with AI increases, people are giving AI a personality of its own through their experiences, even though no specific shape or frame was given to it in advance.

The essential elements of sympathy: intelligence and emotion

Then I can't help but ask the next question. What elements does AI need most in order to “communicate” with humans?

Among the main elements that make up human-like AI, intelligence and emotion are the ones we pay the most attention to when it comes to communicating with AI. An AI that is as smart as humans and capable of human emotions could be said to be just like a human. This is significantly different from the image of AI so far, a smart computer that performs tasks faster and more accurately than people, and it is a challenge we have to solve in the future.

And I think the most representative way to express human-like intelligence and human-like emotions is through conversation.

Voice and language are the basic building blocks of conversation, and much of the industry is working on both. Voice is one approach: if an AI speaks with a voice that sounds human and carries human emotion, people perceive it as more human.

In addition, various attempts are being made with language, the core of conversation, so that AI can speak like a human. To understand human language, these attempts try to grasp and express the emotions contained in a sentence rather than looking only at its meaning, grammatical structure, and context. One example is GPT-3, a natural language processing model that generates human-like text of around 500 characters from only a few keywords.

Once an AI can produce human-like utterances to some extent, the next step toward richer conversation is memory and conversational material. Take Facebook's Blender Bot 2 as an example. It attempts long-term memory spanning more than five turns, so that it can understand the surrounding context in depth and reflect it in the conversation, and it draws on a variety of conversational material through real-time Internet search.
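To make the idea of conversational memory concrete, here is a minimal sketch in Python. It is not how Blender Bot 2 works internally; the `ConversationMemory` class, its methods, and the word-overlap scoring are all hypothetical and chosen only for illustration. Real systems typically rely on learned retrieval rather than simple word matching.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


class ConversationMemory:
    """Hypothetical long-term store: keeps every turn, recalls by word overlap."""

    def __init__(self):
        self.turns = []  # (speaker, text) pairs in arrival order

    def add(self, speaker, text):
        self.turns.append((speaker, text))

    def recall(self, query, k=1):
        """Return up to k stored turns that share the most words with the query."""
        query_words = tokenize(query)
        scored = []
        for index, (_, text) in enumerate(self.turns):
            overlap = len(query_words & tokenize(text))
            if overlap > 0:  # ignore turns with nothing in common
                scored.append((overlap, index))
        # Highest overlap first; the earlier turn wins a tie.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [self.turns[index] for _, index in scored[:k]]


memory = ConversationMemory()
memory.add("user", "My dog Max loves the beach")
memory.add("bot", "That sounds fun")
memory.add("user", "I work as a nurse")
memory.add("bot", "Nursing is hard work")
memory.add("user", "The weather is nice today")
memory.add("bot", "Yes it is sunny")
memory.add("user", "What should I cook tonight")

# Seven turns later, the first turn can still be brought back into context.
recalled = memory.recall("Should I take my dog to the beach again?")
print(recalled)
```

The point of the sketch is only that a turn from well over five turns ago can be retrieved and fed back into the reply, which is the behavior described above.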

If dialogue is one way to capture intelligence and emotion, what other approaches could be tried?

One active area of research is recognizing people's emotions from various angles and interacting with them accordingly. A typical example is SoftBank's Pepper, which analyzes faces and emotions. Neurodata Lab is also continually working to recognize human emotions by analyzing human behavior and signals, specifically voice, facial expression, heartbeat, and breathing.

Meanwhile, the next attempt to capture human intelligence is AGI (artificial general intelligence). When we study, we often find that knowledge learned in one subject connects or applies to other subjects or to other areas of our lives. Similarly, the AI industry is now seeking to use knowledge for general purposes rather than for a single purpose.

General-purpose use means that an AI can be used in various contexts and environments, can solve new kinds of problems that were not anticipated, and can apply the experience and knowledge gained from solving one problem to solve problems in other situations.

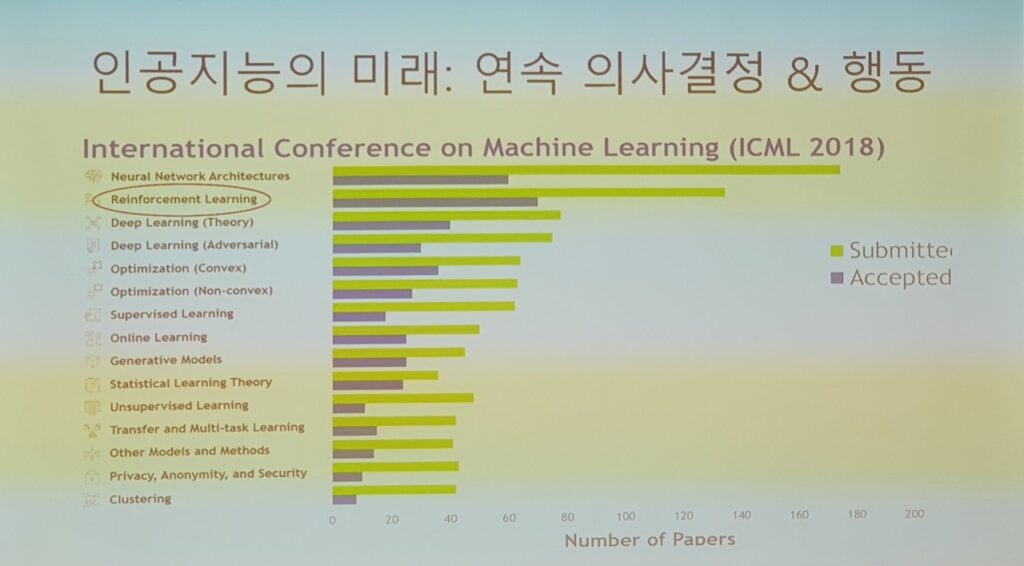

To achieve such general-purpose AI, reinforcement learning is usually used. In fact, many experts suggest that if large-scale language models are the current trend, reinforcement learning for decision-making is the next one. This attention stems from a limitation: existing reinforcement learning models are designed and trained to perform a single task, so they cannot be used in other games, or their performance drops significantly, which leaves them inflexible. Whereas the conventional reinforcement learning scenario rewards interaction toward a single goal in a given environment, DeepMind recently developed XLand, an integrated platform rather than an individual training environment, and research in this direction is under way.
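The single-task limitation can be illustrated with a toy example. The sketch below uses plain tabular Q-learning in a seven-cell corridor; the environment, function names, and hyperparameters are all assumptions made up for this illustration and have nothing to do with XLand itself. An agent is trained only on the task "reach the right end" and is then evaluated on a task it has never seen, "reach the left end".

```python
import random

N_STATES = 7          # corridor cells 0..6 (hypothetical toy environment)
START = 3             # every episode begins in the middle cell
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right


def step(state, action_idx, goal):
    """Move one cell (clipped to the corridor); reward 1.0 only at the goal."""
    nxt = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    done = nxt == goal
    return nxt, (1.0 if done else 0.0), done


def train_q(goal, episodes=500, alpha=0.5, gamma=0.9, max_steps=100, seed=0):
    """Tabular Q-learning with a uniformly random behavior policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = START
        for _ in range(max_steps):
            action = rng.randrange(2)
            nxt, reward, done = step(state, action, goal)
            # Standard Q-learning target: reward plus discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


def reaches_goal(q, goal, max_steps=20):
    """Follow the greedy policy from START and report whether it hits the goal."""
    state = START
    for _ in range(max_steps):
        action = 0 if q[state][0] >= q[state][1] else 1
        state, _reward, done = step(state, action, goal)
        if done:
            return True
    return False


q_right = train_q(goal=6)               # trained only on "goal at the right end"
print(reaches_goal(q_right, goal=6))    # the task it was trained on
print(reaches_goal(q_right, goal=0))    # unseen task: goal moved to the left end
```

With these settings the learned policy should reach the goal it was trained on and fail once the goal moves, because the value table encodes only one objective. An integrated platform of many tasks is aimed at this kind of brittleness.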

AI ethics

If an AI that captures both emotion and intelligence is created like this, I think the last task we need to solve is ethics.

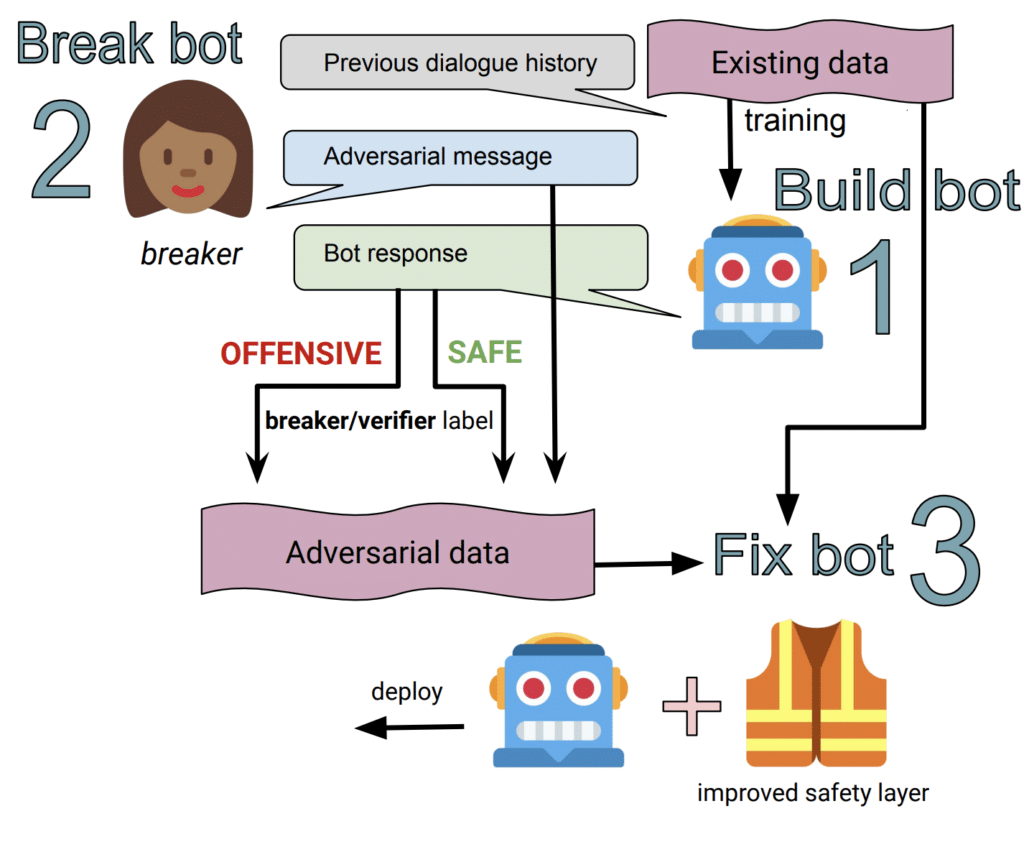

AI models are trained on large amounts of data, and because that data is itself biased, what the AI learns reflects the biases inherent in reality. As shown by Microsoft's Tay, which made biased statements, Amazon's recruitment system, which disadvantaged female applicants, and the Iruda incident in Korea, AI ethics is a prerequisite for putting a service into use.

Various attempts are being made to address this ethical problem. As the technology advances, a model's internal structure becomes harder to understand, so rather than intervening after the fact, the approach is shifting toward intervening in advance, filtering out biased data at the training stage.
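As a rough illustration of intervening before training, the sketch below drops training examples that contain blocked terms. The term list and function names are hypothetical placeholders, and a keyword filter is only a crude stand-in: real bias is often subtle and cannot be caught by word matching alone.

```python
import re

# Hypothetical placeholder terms; a real list would come from a reviewed policy.
BLOCKED_TERMS = {"badword", "slurword"}


def contains_blocked_term(text, blocked=BLOCKED_TERMS):
    """True if any word in the text matches a blocked term (case-insensitive)."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return not words.isdisjoint(blocked)


def filter_before_training(examples, blocked=BLOCKED_TERMS):
    """Keep only the examples that contain no blocked term."""
    return [text for text in examples if not contains_blocked_term(text, blocked)]


raw_examples = [
    "Nice to meet you",
    "You are such a badword",
    "How was your day?",
]
clean_examples = filter_before_training(raw_examples)
print(clean_examples)
```

Only the filtered list would then be passed on to training, so the flagged sentence never reaches the model.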

Creating human-like AI may require endless prioritization and redirection to reach a level and a set of values that everyone can accept. As we envision an AI with human-level intelligence and sensibility and a common ethical view that many can accept, we look forward to the day when human-centered AI is created before human-like AI.